Dual-SLAM: A framework for robust single camera navigation

³Institute for Infocomm Research, Singapore ⁴Sun Yat-sen University, China

Abstract

SLAM (Simultaneous Localization And Mapping) seeks to provide a moving agent with real-time self-localization. To achieve real-time speed, SLAM incrementally propagates position estimates. This makes SLAM fast but also vulnerable to local pose estimation failures. Because local pose estimation is ill-conditioned, such failures happen regularly, making the overall SLAM system brittle. This paper attempts to correct this problem. We note that while local pose estimation is ill-conditioned, pose estimation over longer sequences is well-conditioned. Thus, local pose estimation errors eventually manifest themselves as mapping inconsistencies. When this occurs, we save the current map and activate two new SLAM threads. One processes incoming frames to create a new map; the other, a recovery thread, backtracks to link the new and old maps together. This creates a Dual-SLAM framework that maintains real-time performance while being robust to local pose estimation failures. Evaluation on benchmark datasets shows that Dual-SLAM can reduce failures by a dramatic 88%.
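The control flow described in the abstract can be illustrated with a small toy sketch. This is not the authors' implementation: `Map`, `run_dual_slam`, `recover_link`, the `is_consistent` callback and the `max_backtrack` parameter are all hypothetical names introduced here, frames are reduced to integer ids, and the two roles that the paper runs as parallel threads are executed sequentially to keep the example short and deterministic.

```python
from dataclasses import dataclass, field


@dataclass
class Map:
    """Toy map: only records the ids of the frames it was built from."""
    frames: list = field(default_factory=list)


def recover_link(old_map, new_map, max_backtrack=5):
    """Toy stand-in for the recovery role (assumption, not the paper's method).

    Walks backwards through the most recent frames of the old map and reports
    a link if one of them lies within `max_backtrack` ids of the new map's start.
    """
    start = new_map.frames[0]
    for past in reversed(old_map.frames[-max_backtrack:]):
        if start - past <= max_backtrack:
            return True
    return False


def run_dual_slam(frames, is_consistent):
    """Process frames; on an inconsistency, save the map and start a new one.

    `is_consistent(current_map, frame)` is a hypothetical callback standing in
    for the mapping-consistency check. Returns (maps, links), where each link
    is an (old_map_index, new_map_index) pair.
    """
    maps = [Map()]
    links = []
    for frame in frames:
        current = maps[-1]
        if current.frames and not is_consistent(current, frame):
            # Keep the old map and begin a fresh map from the incoming frame.
            maps.append(Map([frame]))
            # Recovery role: backtrack from the break to join new and old maps.
            if recover_link(current, maps[-1]):
                links.append((len(maps) - 2, len(maps) - 1))
        else:
            current.frames.append(frame)
    return maps, links


# Example run: a simulated inconsistency is detected at frame 5.
maps, links = run_dual_slam(range(10), lambda m, f: f != 5)
```

In this run the first map keeps the frames before the break, the second map is built from the break onwards, and `links` records whether the toy recovery step managed to connect the two.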

Results

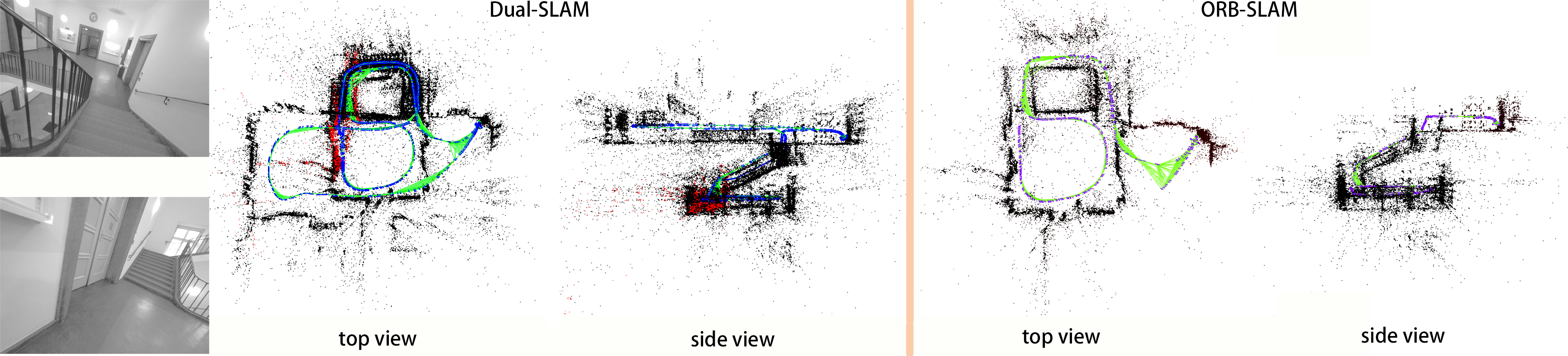

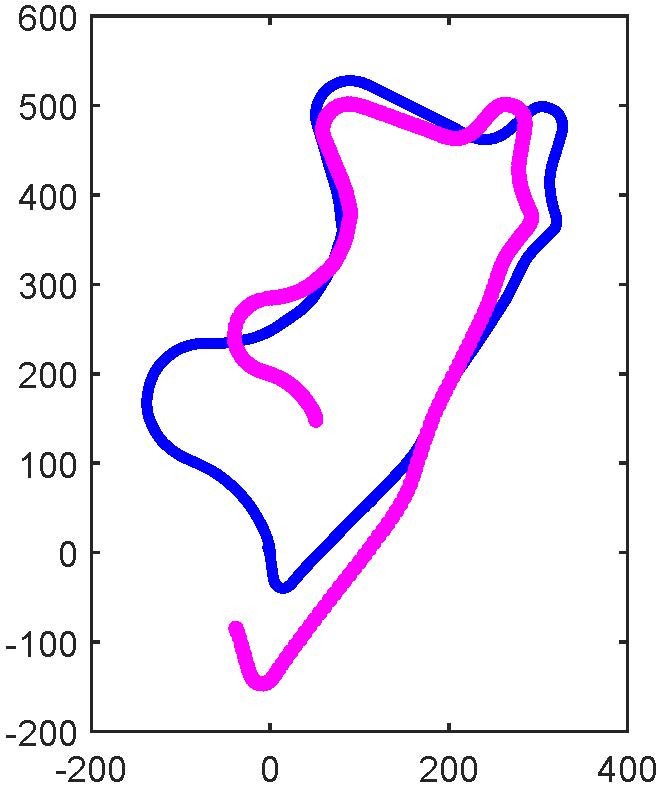

Figure 10: Sequence 41 of the TUM-Mono dataset. Reconstructed 3D points are shown in black, key-frames in blue, linkages between adjacent frames in green and breakage locations in red. Dual-SLAM is stable on this very difficult sequence, allowing it to recover much more of the map than the original ORB-SLAM.

Citation

@inproceedings{hhuang2020dualslam,
  title = {Dual-SLAM: A framework for robust single camera navigation},
  author = {Huang, Huajian and Lin, Wen-Yan and Liu, Siying and Zhang, Dong and Yeung, Sai-Kit},
  booktitle = {2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  pages = {4942--4949},
  year = {2020},
  organization = {IEEE}
}

Acknowledgements

This research is supported by the Singapore Ministry of Education (MOE) Academic Research Fund (AcRF) Tier 1 grant and an internal grant from HKUST (R9429). We also thank Weibin Li and Miaoxin Huang for their generous help.