360VO: Visual Odometry Using A Single 360 Camera

Huajian Huang and Sai-Kit Yeung

The Hong Kong University of Science and Technology

Abstract

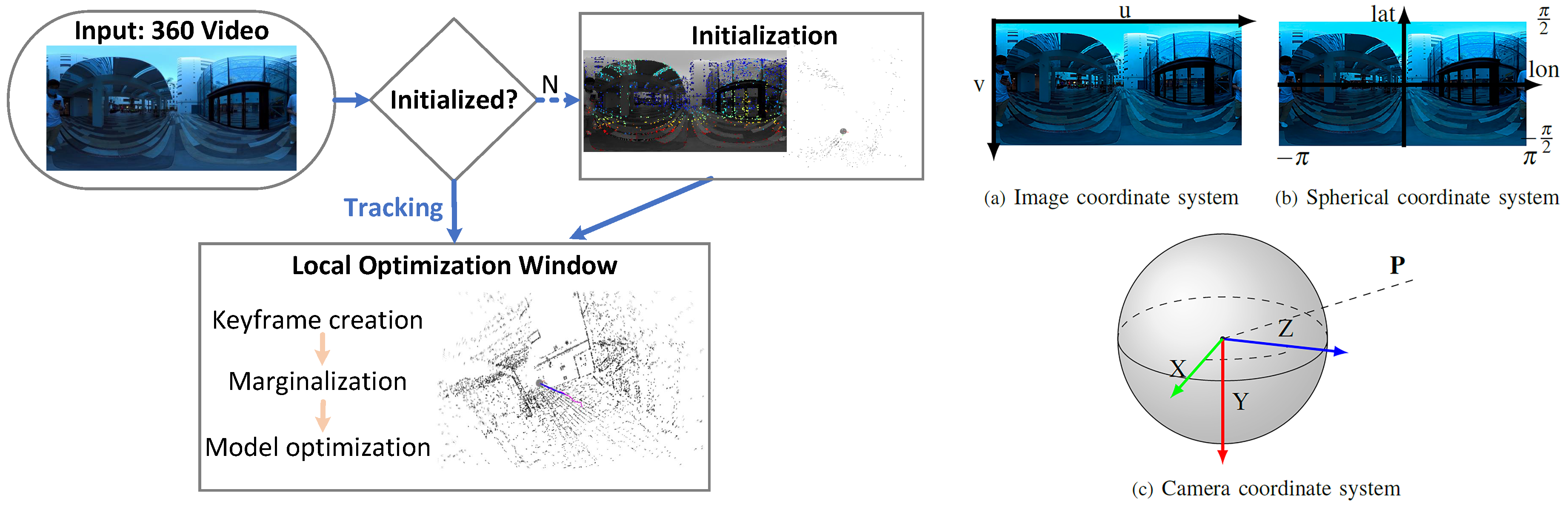

In this paper, we propose a novel direct visual odometry algorithm that takes advantage of a 360-degree camera for robust localization and mapping. Our system extends direct sparse odometry with a spherical camera model, which lets it process equirectangular images without rectification and attain omnidirectional perception. After the mapping and optimization algorithms are adapted to the new model, the camera parameters (both intrinsic and extrinsic) and the 3D map can be jointly optimized within the local sliding window. In addition, we evaluate the proposed algorithm on both real-world and large-scale simulated scenes for qualitative and quantitative validation. The extensive experiments indicate that our system achieves state-of-the-art results.
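To make the spherical camera model concrete, the sketch below maps a 3D point in the camera frame to equirectangular pixel coordinates and back to a unit bearing vector. It is a minimal illustration rather than the authors' implementation: the axis convention (x right, y down, z forward), the placement of the image center at the forward direction, and the function names `project` and `unproject` are all assumptions made for this example.

```python
import math


def project(point, width, height):
    """Map a 3D camera-frame point to equirectangular pixel coordinates.

    Assumed convention (not taken from the paper): x right, y down,
    z forward, with the forward direction at the image center.
    """
    x, y, z = point
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("cannot project the camera center")
    lon = math.atan2(x, z)                        # longitude in [-pi, pi]
    lat = math.asin(max(-1.0, min(1.0, -y / r)))  # latitude, up is positive
    u = (lon / (2.0 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v


def unproject(u, v, width, height):
    """Map equirectangular pixel coordinates to a unit bearing vector."""
    lon = (u / width - 0.5) * 2.0 * math.pi
    lat = (0.5 - v / height) * math.pi
    return (
        math.cos(lat) * math.sin(lon),
        -math.sin(lat),
        math.cos(lat) * math.cos(lon),
    )
```

Because every bearing direction maps to a valid pixel, a point behind the camera still projects into the image, which is what allows landmarks to stay in view without rectifying or cropping the input.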

Video

Results

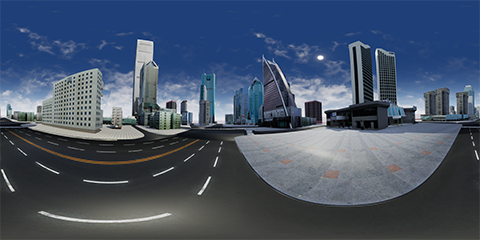

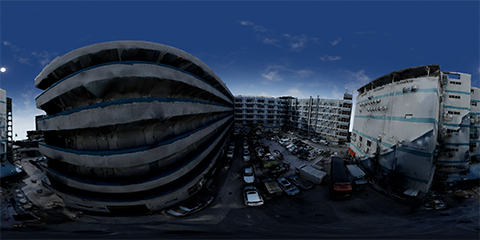

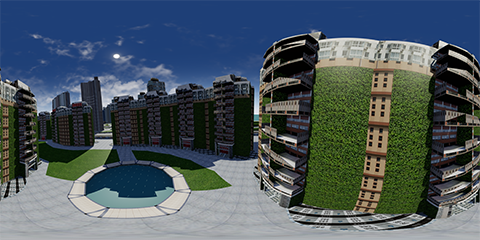

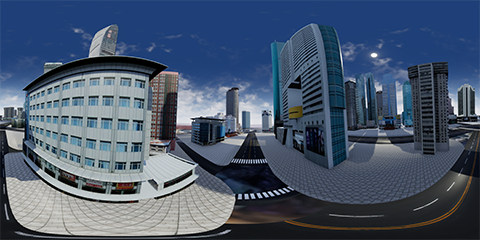

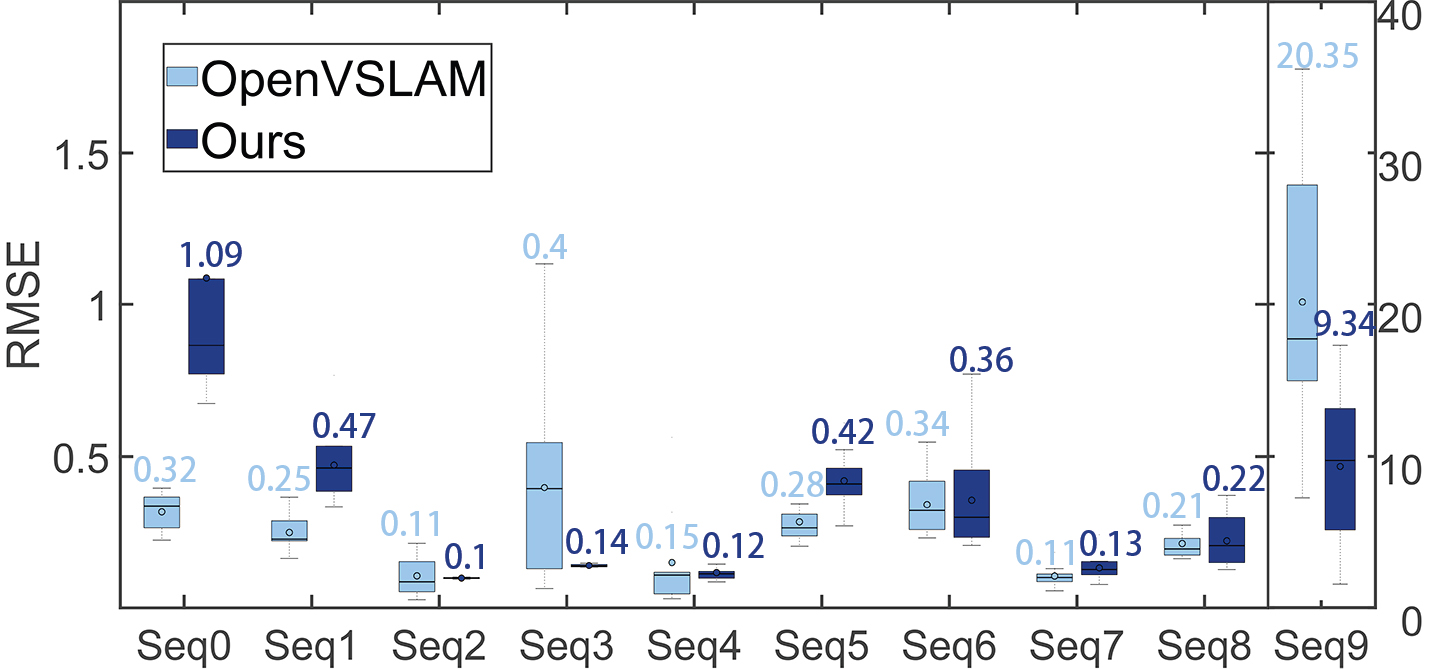

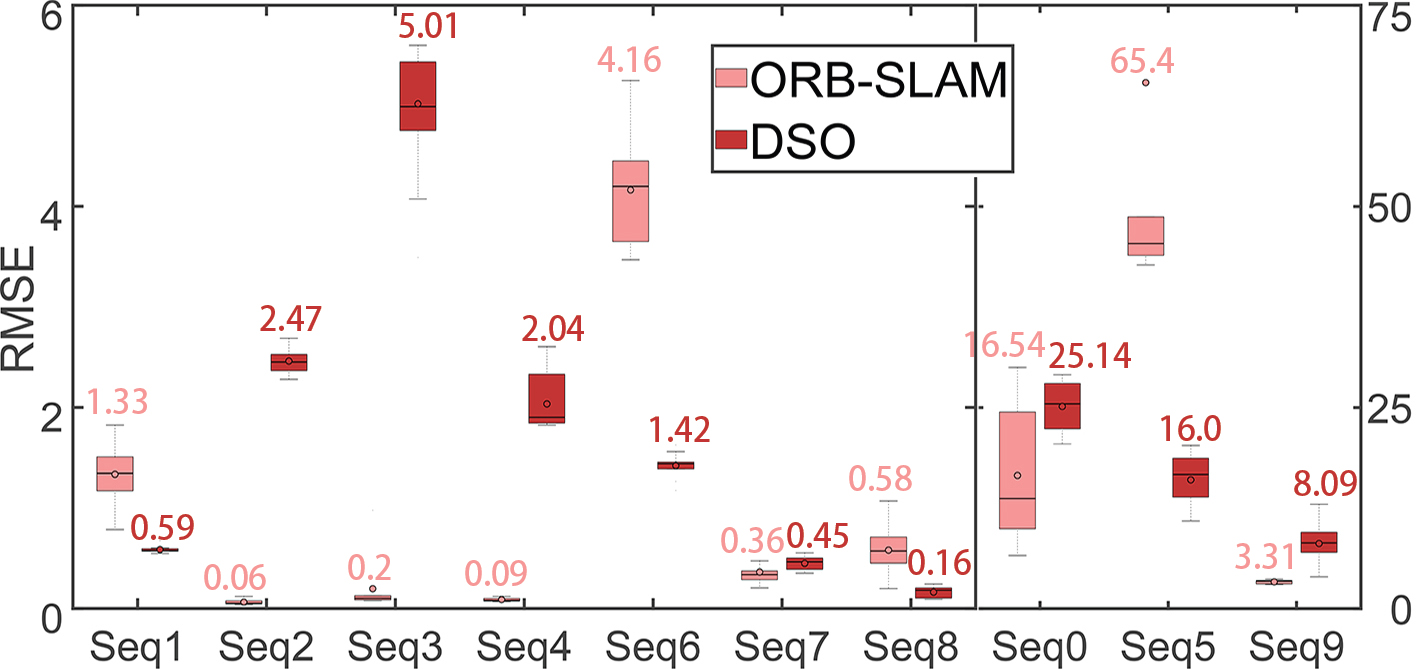

Figure 1. Results on the synthetic dataset. Each sequence is run 10 times, and the RMSE (m) of the trajectory is reported. The number at the top of each bar is the mean RMSE. Our 360VO achieves results comparable to those of OpenVSLAM. In addition, we rectify and crop the 360 images into perspective images with a 90° FOV and use them as input to ORB-SLAM and DSO. The methods that use a 360 camera are generally more robust and precise.
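The metric in Figure 1 can be sketched as follows: compute the RMSE of the position error for each run, then average over the runs of a sequence. This is a minimal illustration under stated assumptions. It assumes the estimated and ground-truth trajectories are already time-associated and expressed in the same frame and scale; the page does not describe the alignment step, and the names `trajectory_rmse` and `mean_rmse` are hypothetical.

```python
import math
from statistics import mean


def trajectory_rmse(estimated, ground_truth):
    """RMSE (same unit as the inputs) of per-pose position error.

    Assumes both trajectories are lists of (x, y, z) positions that are
    already associated pose by pose and aligned to a common frame.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and equally long")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est, gt))
        for est, gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def mean_rmse(runs, ground_truth):
    """Mean of the per-run RMSE values for one sequence."""
    return mean(trajectory_rmse(run, ground_truth) for run in runs)
```

Passing the 10 estimated trajectories of one sequence as `runs` would yield the kind of value printed above each bar.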

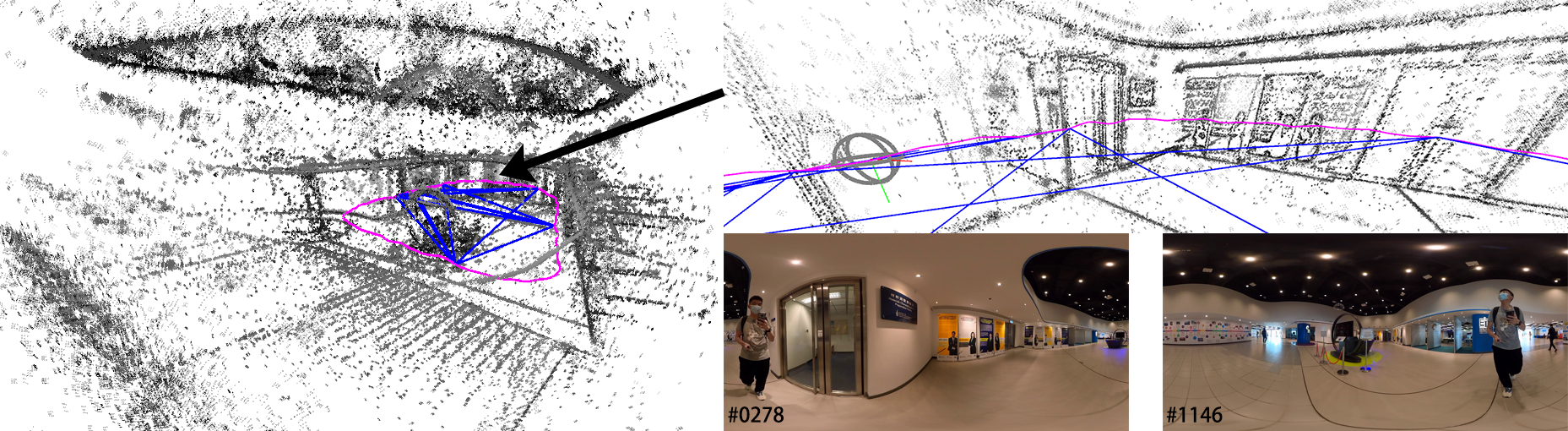

Figure 2. Blue lines represent constraints between active keyframes in the local optimization window, and the magenta curve denotes the camera trajectory. The gray sphere marks the current frame's position, and the black points denote the 3D map. Because the same landmarks can be observed for a longer period, the estimated trajectory is highly consistent and has low drift.

Comparison to OpenVSLAM

More Results

Citation

@inproceedings{hhuang2022VO,
  title = {360VO: Visual Odometry Using A Single 360 Camera},
  author = {Huang, Huajian and Yeung, Sai-Kit},
  booktitle = {International Conference on Robotics and Automation (ICRA)},
  year = {2022},
  organization = {IEEE}
}

Acknowledgements

This research project is partially supported by an internal grant from HKUST (R9429) and the Innovation and Technology Support Programme of the Innovation and Technology Fund (Ref: ITS/200/20FP).